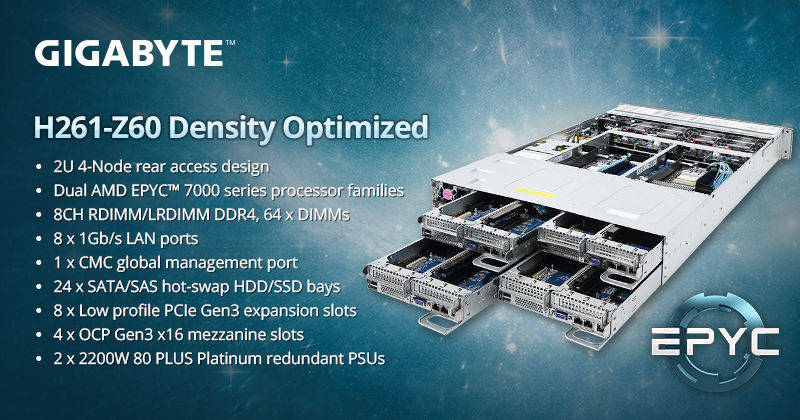

The H261-Z60 combines 4 individual hot-pluggable sliding node trays in a 2U chassis. The node trays slide in and out easily from the rear of the unit.

EPYC Performance

Each node supports dual AMD EPYC 7000 series processors, with up to 32 cores, 64 threads and 8 channels of memory per CPU, so each node can provide up to 64 cores and 128 threads of compute power. For memory, each socket uses EPYC’s 8 memory channels with 1 x DIMM per channel (8 x DIMMs per socket), for a total of 16 x DIMMs per node (over 2TB of memory supported per node).
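The per-node figures above follow from simple multiplication, and the same arithmetic extends to the full 4-node chassis. The sketch below is only an illustration: the constant and function names are made up for this example, and the chassis-level totals are derived from the quoted per-node numbers rather than taken from a spec sheet.

```python
# Figures quoted in the text above (maximum configuration).
SOCKETS_PER_NODE = 2
CORES_PER_CPU = 32
THREADS_PER_CPU = 64
CHANNELS_PER_CPU = 8
DIMMS_PER_CHANNEL = 1
NODES_PER_CHASSIS = 4


def node_totals():
    """Return the maximum cores, threads and DIMM slots for one node."""
    return {
        "cores": SOCKETS_PER_NODE * CORES_PER_CPU,
        "threads": SOCKETS_PER_NODE * THREADS_PER_CPU,
        "dimms": SOCKETS_PER_NODE * CHANNELS_PER_CPU * DIMMS_PER_CHANNEL,
    }


def chassis_totals():
    """Scale the per-node totals to a fully populated 4-node chassis (derived, not quoted)."""
    return {name: value * NODES_PER_CHASSIS for name, value in node_totals().items()}


if __name__ == "__main__":
    print("per node:   ", node_totals())
    print("per chassis:", chassis_totals())
```

Changing `CORES_PER_CPU` or `DIMMS_PER_CHANNEL` shows how a lower-bin CPU or a different memory layout would change the totals.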

Packing this much compute into one system can reduce data center footprint by up to 50% compared with standard 1U dual-socket servers. GIGABYTE has also recently demonstrated that our server design is well optimized for AMD EPYC by achieving some of the top SPEC CPU 2017 benchmark scores for AMD EPYC single-socket* and dual-socket** systems.

* The R151-Z30 achieved the highest SPEC CPU 2017 benchmark score for a single-socket AMD Naples platform among all vendors as of May 2018

** The R181-Z91 achieved the second-highest SPEC CPU 2017 benchmark score for a dual-socket AMD Naples platform among all vendors as of May 2018
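The "up to 50%" footprint figure above can be reproduced with a quick rack-unit calculation: four dual-socket nodes occupy 2U in this chassis, versus 4U as four separate 1U servers. The following is a minimal sketch under that assumption; the function names are hypothetical, and it counts rack units only (no switches, PDUs or spacing).

```python
def rack_units_needed(nodes, nodes_per_chassis, chassis_height_u):
    """Rack units needed to host `nodes` servers, rounding up to whole chassis."""
    chassis = -(-nodes // nodes_per_chassis)  # ceiling division
    return chassis * chassis_height_u


def footprint_reduction(nodes):
    """Fractional rack-space saving of 2U 4-node chassis over 1U single-node servers."""
    dense = rack_units_needed(nodes, nodes_per_chassis=4, chassis_height_u=2)
    standard = rack_units_needed(nodes, nodes_per_chassis=1, chassis_height_u=1)
    return 1 - dense / standard


if __name__ == "__main__":
    for n in (4, 5, 40):
        print(n, "nodes:", f"{footprint_reduction(n):.0%} less rack space")
```

The saving reaches the full 50% only when the node count is a multiple of four; a partly filled chassis saves less, which is consistent with the "up to" wording.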

Ultra-Fast Storage Support

At the front of the unit are 24 x 2.5” hot-swappable drive bays, providing 6 x SATA / SAS HDD or SSD bays per node. In addition, each node features dual M.2 ports (PCIe Gen3 x4) to support ultra-fast, ultra-dense NVMe flash storage devices. Dual M.2 support is double the capacity of competing products on the market.

Best-in-Class Expansion Flexibility

Dual 1GbE LAN ports are integrated into each node as a standard networking option. In addition, each node features 2 x half-length low-profile PCIe Gen3 x16 slots and 1 x OCP Gen3 x16 mezzanine slot for expansion options such as high-speed networking or RAID storage cards. GIGABYTE delivers best-in-class expansion slot options for this form factor.

Easy & Efficient Multi-Node Management

The H261-Z60 features a system-wide Aspeed CMC (Central Management Controller) and LAN module switch, connecting internally to the Aspeed BMCs integrated on each node. As a result, only one MLAN connection is required to manage all four nodes, which means less ToR (Top of Rack) cabling and fewer ports used on your top-of-rack switch (one port instead of four for remote management of all nodes).
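To put the port saving in rack-level terms, the sketch below counts top-of-rack management ports with and without a shared CMC uplink. It is an illustrative calculation only: the function name is hypothetical, and it assumes every chassis is fully populated with four nodes.

```python
NODES_PER_CHASSIS = 4  # from the text: four nodes share one CMC


def management_ports(chassis_count, shared_cmc):
    """Switch ports needed for remote management of `chassis_count` chassis.

    With a shared CMC, each chassis needs one uplink; without it, each
    node's BMC would need its own switch port.
    """
    if chassis_count < 0:
        raise ValueError("chassis_count must be non-negative")
    ports_per_chassis = 1 if shared_cmc else NODES_PER_CHASSIS
    return chassis_count * ports_per_chassis


if __name__ == "__main__":
    racks_of = 10  # example value, not from the source
    print("per-node uplinks:", management_ports(racks_of, shared_cmc=False))
    print("shared CMC:      ", management_ports(racks_of, shared_cmc=True))
```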

Ring Topology Feature for Multi-Server Management

Going a step further, the H261-Z60 also features* the ability to create a “ring” connection for management of all servers in a rack. Only two switch connections are needed, while the servers are connected to one another in a chain. The ring will not be broken even if one server in the chain is shut down. This further reduces cabling and switch port usage, for even greater cost savings and management efficiency.

* Optional Ring Topology Kit must be added
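The reason a ring tolerates the loss of one server can be checked with a small graph model: the servers form a chain, and both ends of the chain connect to the switch, so every server has two paths to it. The sketch below is a simplified model, not a description of the actual kit. It conservatively assumes that a shut-down server forwards no management traffic at all, and the function name and server numbering are made up for the example.

```python
from collections import deque


def reachable_servers(num_servers, failed=None):
    """Return the servers the switch can still reach when `failed` is down.

    Servers are numbered 0..num_servers-1 and daisy-chained; server 0 and
    the last server each have one uplink to the switch (two ports in total).
    """
    graph = {"switch": set()}
    for server in range(num_servers):
        if server != failed:
            graph[server] = set()

    def link(a, b):
        # Only add a link if both endpoints are up.
        if a in graph and b in graph:
            graph[a].add(b)
            graph[b].add(a)

    if num_servers > 0:
        link("switch", 0)
        link("switch", num_servers - 1)
    for server in range(num_servers - 1):
        link(server, server + 1)

    # Breadth-first search outward from the switch.
    seen = {"switch"}
    queue = deque(["switch"])
    while queue:
        node = queue.popleft()
        for neighbour in graph[node]:
            if neighbour not in seen:
                seen.add(neighbour)
                queue.append(neighbour)
    seen.discard("switch")
    return seen


if __name__ == "__main__":
    up = reachable_servers(10, failed=4)
    print("still manageable:", sorted(up))
```

In this model any single failure leaves all other servers reachable, because traffic can reach each remaining server from one end of the chain or the other.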

Efficient Power & Cooling

GIGABYTE’s H261-Z60 is designed not only for greater compute density but also for better power and cost efficiency. The system architecture features shared cooling and power for the nodes, with a dual fan wall of 8 (4 x 2) easy-swap fans and 2 x 2200W redundant PSUs. In addition, the nodes connect directly to the system backplane with GIGABYTE’s Direct Board Connection Technology, resulting in less cabling and improved airflow for better cooling efficiency.
